The author of a book calculating the possibility of the end of humanity says that natural risks like volcanoes and earthquakes have been dwarfed by man-made threats.

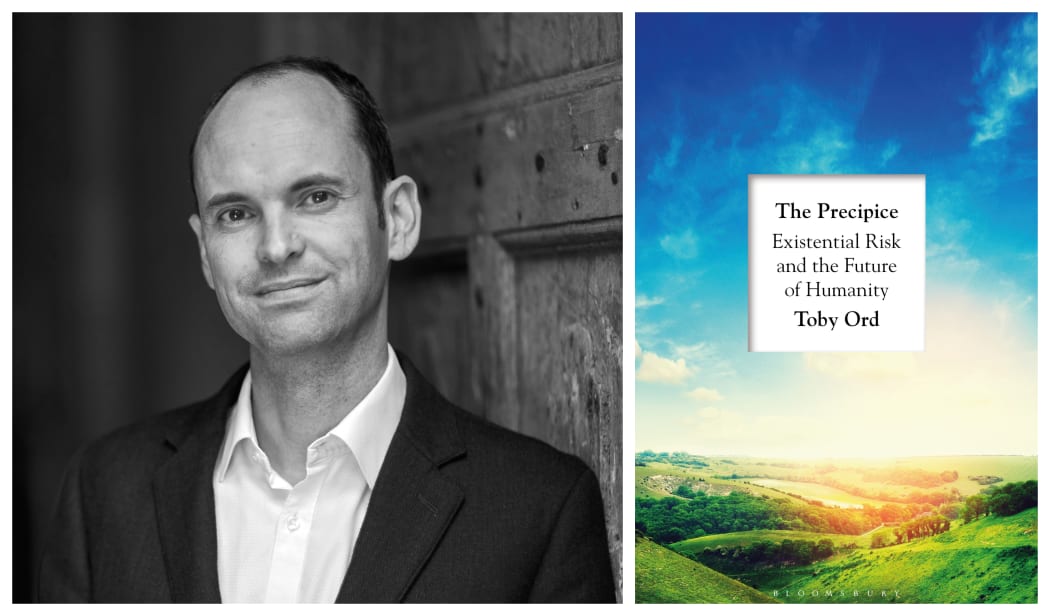

Toby Ord / The Precipice Photo: supplied / Fisher Studios Ltd / Bloomsbury Publishing

At the same time as the Covid-19 pandemic began sweeping the world, Australian moral philosopher Toby Ord released his book calculating the possibility of the end of humanity.

In The Precipice, he weighs up scenarios that could contribute to our downfall, from the man-made threats of climate change and nuclear war to the potentially greater, more unfamiliar threats from engineered pandemics and advanced artificial intelligence.

Ord - who is also a research fellow at the Future of Humanity Institute, and a Senior Research Fellow in Philosophy at Oxford University - told Kim Hill that humans wouldn't have survived for so long if natural risks were that high, but that argument only worked for risks that had not changed over time.

"So risks like asteroids - if anything, they've gone down due to our ability to prepare for them ... we can see them coming now," Ord said.

"We think that we've seen 95 percent of those that could pose a threat to the Earth and have found that none of those that we have seen are likely to pose a threat any time soon."

If such an asteroid threat was detected, the consequences of an impact could be akin to "a nuclear winter", he said, which was what killed off the dinosaurs.

"The skies would get blackened out by dust and crops would fail. So if we had a few years to prepare, we could grow extra food, eat less, and store up big supplies."

Human activities also increase the number of new pandemics we face and make them easier to spread, he said.

Staff members receive the novel coronavirus strain at a laboratory of the Chinese Center for Disease Control and Prevention in February, 2020. Photo: Liu Peicheng / Xinhua / AFP

Of particular interest to Ord is the security surrounding dangerous pathogens.

"It's quite shocking if you look at the history of labs where people researched dangerous pathogens. The last death from smallpox in the world was in Britain, after it had been eradicated in the wild, where it escaped out of a lab. There was a major foot and mouth outbreak - a disease among livestock - and the most recent big outbreak in the UK escaped from a research lab, in fact one of the BSL-4 [labs] of the highest security level.

"I think the final case of SARS was also a lab escape. So it actually does happen quite a lot which makes it particularly scary if we have scientists in some cases making pathogens more deadly. This happened with bird flu, where researchers in the Netherlands tried to make it transmissible between mammals, whereas previously you could only catch it from birds."

While researchers in those cases may have good intentions, it was nevertheless a difficult balancing act to weigh up the risk of the research itself versus the risk posed by the pathogens, he said.

The threat from Artificial Intelligence

In his book, he estimates there is a one in six chance that humanity will not make it to the end of the century.

However, it is not a scientific number based on elaborate calculations or data sources, but rather Ord's judgement call.

"Ultimately, I think that there are a number of risks that are substantial. I think that the existential risk posed by nuclear war and by climate change is something like a one in 1000 chance that humanity ultimately fails this century. I consider that to be a substantial chance but still probably not going to happen," he said.

The remains of burnt out buildings are seen along main street in the New South Wales town of Cobargo on December 31, 2019, after bushfires ravaged the town. Photo: Sean Davey / AFP

"I think when it comes to engineered pandemics, I put that at a 3 percent chance, so one in 30. When it comes to advanced artificial intelligence, I put it even higher, about one in 10, and when I take this all together with a few other risks I come to about one in six [chance]."

The reason advanced artificial intelligence ranked higher in his estimate was that he sees it as the one thing that could threaten what has helped humanity get so far and what sets us apart from other species - our mental abilities.

"AI researchers are working on making systems that are more intelligent than us - that have more of these cognitive abilities and that are better able to do these things. So far, it's been mainly on very narrow tasks where it can exceed human abilities at calculating, or logic, or chess or Go. But we're increasingly trying to move to more general systems, like the science fiction conceptions of a robot or AI that can do all the kinds of things that humans would do," Ord said.

When AI scientists were asked for their predictions about when that could happen, he said they were uncertain but thought it could happen in about 50 years.

"And they also thought there was a significant chance that this could be an incredibly bad outcome. I think you can see that if you think about the question of what makes us in control of our destiny - well we would be kind of handing over this unique position as the most intelligent entities in the world to our creations."

He reached his one in 10 figure for AI by breaking it down into a one in two chance of developing such AI systems, and a one in five chance of not surviving the transition when we create them.

"I think we'll still figure out how to control them or align them with our interests but there's a serious chance that we can't neglect that we won't be able to."
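The arithmetic behind that breakdown can be sketched in a few lines. This is only an illustration of how the two quoted figures chain together, not Ord's own method; the variable names are invented for the example, and the inputs are his judgement calls rather than measured data.

```python
from fractions import Fraction

# Figures quoted in the article (judgement calls, not measured data).
p_developed = Fraction(1, 2)             # chance such AI systems are developed
p_fail_given_developed = Fraction(1, 5)  # chance of not surviving the transition

# Chain the two steps: P(catastrophe) = P(developed) * P(fail | developed)
p_ai_catastrophe = p_developed * p_fail_given_developed

print(p_ai_catastrophe)  # prints 1/10
```

Multiplying the two fractions gives one in 10, matching the AI estimate quoted earlier.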

Even if we were to think about simply turning them off, the sinister possibility of them resisting that attempt was plausible, Ord said.

"You don't need that much intelligence to start to form intentions to deceive if it's the best way to achieve your goal."

Extinction might not be our only end

An existential risk could be any event that destroys the long-term potential of humanity and future generations, Ord said.

This NASA handout obtained October 2, 2019 shows a photo by astronaut Nick Hague as he prepares to conclude his stay aboard the orbiting laboratory. Photo: Nick Hague / NASA / AFP

If instead of extinction there was an event that destroyed civilisation, then our potential would also be crushed in a similar way.

"Because there's this irrevocability about it, they all have similar properties when you're trying to understand them. For example, it's crucial that these things never happen even once in the entire lifespan of humanity, so we don't have opportunities to really learn from trial and error when it comes to existential risk and this creates immense challenges."

While many have pondered going intergalactic to escape the risks on Earth, Ord believes we still have a vast amount of time remaining on Earth; it's just that there may be an even longer time to survive around other stars.

And it may even be advanced Artificial Intelligence that would help launch us to that place, he said.

"If we saw that a lot of the risks that we face are technological in origin and we responded to that by forever renouncing technology, I think that in itself may be an existential catastrophe, because it's very plausible that to truly live up to humanity's potential we do need additional advanced technologies, and AI might be one of them."

There was no one answer to this complex situation, he said, adding that he had left it open to readers.

Either way, if we become the first generation to break the chain in the passing down of "seeds of potential", Ord said, "we would be the worst generation that ever existed."

It was crucial to devote resources to ensure we do not fail the future or past generations, he said.

Existential risk is one of the causes prioritised in the 'effective altruism' movement, which Ord co-founded in 2009 with William MacAskill, focusing on global poverty, future generations and animal welfare. It encourages people to use evidence to help others as much as possible.

It also forms the basis for his international society called Giving What We Can, whose members have pledged more than $1.5 billion.

Ord said all members commit to giving 10 percent of their income to help those less fortunate, and he had also personally committed to giving away income above a certain amount (£18,000).

He believes that if global poverty no longer existed at its current levels, people would look back and be dumbfounded by the moral paralysis of people today.

"I do think we can get past this time of extreme poverty, the likes of which we see on our television screens, and I do think people will look back and say 'wow that was a time when people could really do amazing things for others, imagine living at such a time'. Like if you watch TV and you think about the citizens living in Nazi Germany and the opportunities of everyday heroism that existed - I think we'll think of it a little bit like that."